Testing and the Product Cycle

In response to my last post, a few insightful readers asked about various types of testing and whether they go beyond the single purpose of confirming expected behaviors.

I also mentioned in the last post that human QA analysts are often able to effect quality in a way that automated tests currently do not, but that such an activity might not really fall under a strict definition of “testing”.

So, even though I had planned to dig deeper into which automated tests are worth writing and how to write them well, I think taking an even higher-level look at where testing fits into the product cycle will provide really helpful context.

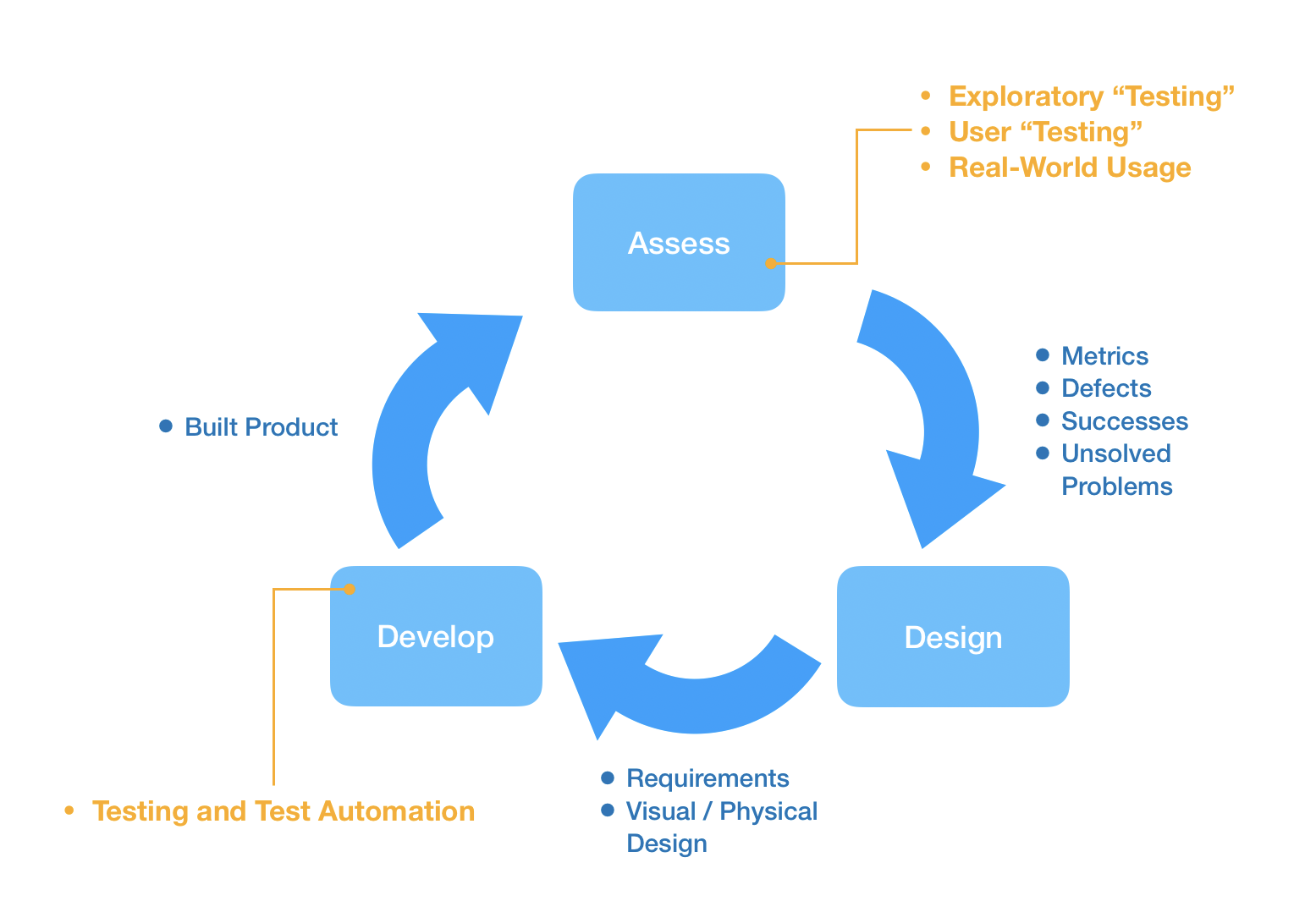

The Cycle of Assess, Design and Develop

All products — and particularly software — follow a similar pattern:

- Assess what problems need to be solved or what processes can be improved in a certain field

- Design a new or better solution for one or more of those problems or processes

- Develop (actually build) that design into a real thing — whether it’s an app, a suitcase, or a satellite.

After a product or piece of software is developed, whatever was built and released is usually assessed again to determine how effective it is at solving the intended problems, and new insights from that assessment are used to further refine the design of the product or software.

So where does testing (and automated testing) fit into this cycle? My position is that testing (both automated and otherwise) belongs in the development stage. The purpose of testing is to validate that what was built matches what was designed.

Imagine if you designed a pair of roller skates, handed the specifications over to a factory to produce, but the factory mistakenly created shoes with sponges on the bottom instead of wheels. When you started getting negative feedback from users, you’d be a little confused as to why everyone says they can’t skate. If you never tested the product that came out of the factory against your design, you might assume that you need to add additional wheels, or change the design to use ball bearings. But if the factory continued to make mistakes, you would find that all your great fixes didn’t lead to satisfied users, and you’d probably give up in frustration.

This is one of many reasons why testing is vital to ensure that the implementation of a product matches what was designed. It means you can tell users confidently what to expect, and that the solution you conceived of is what they are actually getting.

The line between the end of the development phase (where testing happens) and the assessment or feedback phase is often blurred. This can make things confusing. Here are some examples:

Product asks for a slider on an app screen so the user can select a value between 0 and 100. The developer implements the request as specified and gives the build to Product. Product then does “acceptance testing” or gets a focus group to do “user testing” and then decides that they would rather not have a slider, but an input field that the user can just type into.

In this case, development was completed successfully, and immediately after Product confirmed the behavior was what they requested, they rolled right into the assessment phase: they tried the solution themselves as users (or had actual users try it), got feedback that the slider felt fidgety, moved back around to the design phase, and decided to solve the task in a different way using an input field. Then they came back to the developer with a different requirement.

Even though this will be described as “didn’t pass acceptance testing” or “didn’t do well in user testing”, this has nothing to do with testing and everything to do with feedback from real usage. The testing phase ends the moment that all expected behaviors are confirmed for the developer’s implementation, which means the product can now be assessed by users or potential users.
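To make the boundary concrete, here is a minimal sketch of what the testing side of the slider example might look like. It is written in Python for brevity (the same shape applies to XCTest or JUnit), and it assumes a hypothetical `ValueSlider` model whose `drag_to` method clamps the requested value into the 0 to 100 range; neither the class nor the clamping behavior comes from a real codebase. Every test confirms something Product explicitly asked for, and none of them could ever report that the slider "feels fidgety". That finding can only come from assessment.

```python
import unittest


class ValueSlider:
    """Hypothetical slider model: lets the user select a value from 0 to 100."""

    MIN_VALUE = 0
    MAX_VALUE = 100

    def __init__(self) -> None:
        self.value = self.MIN_VALUE

    def drag_to(self, requested: int) -> None:
        # Assumed behavior: clamp the requested position into the designed range.
        self.value = max(self.MIN_VALUE, min(self.MAX_VALUE, requested))


class ValueSliderTests(unittest.TestCase):
    """Each test confirms one behavior that Product explicitly asked for."""

    def test_selects_value_within_range(self) -> None:
        slider = ValueSlider()
        slider.drag_to(42)
        self.assertEqual(slider.value, 42)

    def test_cannot_go_below_zero(self) -> None:
        slider = ValueSlider()
        slider.drag_to(-5)
        self.assertEqual(slider.value, 0)

    def test_cannot_go_above_one_hundred(self) -> None:
        slider = ValueSlider()
        slider.drag_to(150)
        self.assertEqual(slider.value, 100)
```

Once these pass, the testing phase for this ticket is over. Replacing the slider with an input field is a new design with new requirements, and it would get its own tests.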

A developer implements a ticket that calls for adding the ability to make a video go full screen with a button, and passes it over to QA. QA tries it out and then sends it back saying “there should be a way to switch between letterboxed or not when in full screen”. Again, QA ended up doing two different things at the same time: confirming the expected behavior (the button makes the video go full screen), and also evaluating as a user whether they like the solution and whether they feel it can be improved. They then presented a new problem that needs a solution designed for it. This goes beyond testing requirements and crosses into the assessment phase.
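In the same spirit, a test for the full-screen ticket covers only what the ticket asked for. The sketch below assumes a hypothetical `VideoPlayer` model with a `tap_full_screen_button` method and an `is_full_screen` flag, used purely for illustration. The comment at the end marks where QA's letterboxing idea would *not* go: it has no requirement behind it yet, so there is nothing for a test to confirm.

```python
import unittest


class VideoPlayer:
    """Hypothetical player model for the ticket "make a video go full screen with a button"."""

    def __init__(self) -> None:
        self.is_full_screen = False

    def tap_full_screen_button(self) -> None:
        self.is_full_screen = True


class FullScreenTicketTests(unittest.TestCase):
    def test_button_makes_video_full_screen(self) -> None:
        player = VideoPlayer()
        player.tap_full_screen_button()
        self.assertTrue(player.is_full_screen)

    # No test for switching letterboxing on or off: the ticket never asked for it.
    # QA's suggestion is an assessment finding that needs design (and a new
    # ticket) before any test or code for it is written.
```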

There’s nothing wrong with QA assessing the built product, or with developers and QA being involved in the design phase, etc. It’s actually really helpful to bring these disciplines together for all phases of the product cycle. However, teams and processes will be a lot saner if each phase and each iteration of the cycle is kept discrete. Doing otherwise leads to “endless churn”, “scope creep”, and “tickets that never close”.

In cases where QA comes up with new problems and sends them as issues directly back to the developer, it also leads to undesigned, ad hoc solutions. In cases where Product changes or adds requirements during “acceptance testing”, the new or modified requirements are usually not written down, updated in the original ticket, or otherwise captured properly, which is one of the dangers of mixing testing and assessment.

One of the goals of Agile is to speed up each iteration of this product cycle, to get a small increment of newly designed functionality developed, tested and in the hands of users where it can be assessed and used to guide the next designed feature (where the cycle starts again). Older methodologies (e.g. “Waterfall”) also go through these same phases, but each phase is applied to the whole project instead of just a small piece. And each phase takes months or years, meaning that a long time passes before any part of the designed solution can be assessed in the hands of users.

But in the rush to “be Agile”, product teams often forget that this whole cycle is important. In order for programmers to develop a feature, they need explicit requirements, not just vague ideas, otherwise they spend their time being designers and brainstorming product solutions instead of coding. In order to assess how well the current solution is working with users, business analysts and product people need confidence that the software is actually doing what was designed and is not in fact malfunctioning. In order to design new or better behaviors, designers and product need metrics, results from user testing, and any other information on how the product is or isn’t meeting the needs it was designed for. The key point is to keep each iteration of the cycle clearly defined and to ensure each step gets the attention and confidence it deserves.

A last note on this cycle: it’s possible and very common for the entire Assess -> Design -> Develop cycle to happen internally within a company or team many times before a product or update is actually released to end users. This allows for a high level of polish before something hits the market. However, it is just as important to keep some discipline around completing each of these phases deliberately.

So Many Kinds of Testing

One of the reasons things can get particularly blurry is that so many things are called “testing”.

- Unit testing

- Acceptance Testing

- User Testing

- Load Testing

- Penetration Testing

- A/B Testing

- Market Testing

Where in the Assess-Design-Develop product cycle do all of these things fit in? For the purposes of software development, and this series of blog posts in particular, I am drawing a line and splitting these into two buckets:

- TESTING: Occurs at the end of the development phase. Its purpose is to confirm expected behaviors, and thus that the designed requirements are met. Includes unit testing, manual regression testing, UI testing, etc. Ideally performed automatically whenever changes to the code are committed. Does not discover new problems to solve or identify new requirements.

- ASSESSMENT: Occurs after development is complete and the latest version of the product has been released, either internally or externally. Its purpose is to discover previously unidentified requirements, places where the designed solution falls short or can be improved, and opportunities to solve new problems. Assessment is intended to discover new problems to solve or identify new requirements.

This gives us a simple way to identify where in the cycle an activity belongs: if it uncovers a new problem or requirement that wasn’t previously expected and designed for, then it belongs in the Assessment phase. And even though it may be called “testing” or have “testing” in its name, for the purposes of clarity we will not be considering it part of the testing activity or suite.

Some examples of things that uncover new problems or requirements that weren’t previously expected and designed for:

- A/B Testing (used to determine whether a new solution serves user needs better than an existing one. If it does, a new requirement arises to implement the new solution or make it the default.)

- User Testing (used to determine whether an implemented solution meets user needs, causes difficulties, or can be improved. Leads to new or refined solutions and requirements.)

- Penetration Testing (used to find gaps in software that can be exploited by malicious actors. These gaps are essentially missing requirements for resilience, security, etc. that were not known about until assessment discovered a need for them.)

- Fuzz Testing (used to see whether a program crashes or behaves unexpectedly when automated software passes it large, often random sets of different inputs.)
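Fuzz testing shows particularly clearly why these activities belong to assessment. The sketch below is a deliberately tiny, hand-rolled fuzz loop, not a real fuzzing tool. It assumes a hypothetical `parse_duration` function that turns "MM:SS" into seconds, and it treats `ValueError` as the intended way of rejecting bad input. Nothing in it checks a requirement: it simply throws random strings at the function and records anything surprising.

```python
import random
from typing import Callable


def parse_duration(text: str) -> int:
    """Hypothetical function under assessment: converts "MM:SS" to seconds."""
    parts = text.split(":")
    minutes = int(parts[0])  # int() raises ValueError for non-numeric text
    seconds = int(parts[1])  # nobody specified what happens when there is no colon
    return minutes * 60 + seconds


def fuzz(target: Callable[[str], object], iterations: int = 1000,
         seed: int = 0) -> list[tuple[str, str]]:
    """Feed random short strings to `target` and collect unexpected exceptions.

    ValueError counts as an intended rejection of bad input; any other
    exception is recorded as a finding, together with the input that caused it.
    """
    rng = random.Random(seed)  # fixed seed so every finding can be reproduced
    alphabet = "0123456789: -a"
    findings: list[tuple[str, str]] = []
    for _ in range(iterations):
        candidate = "".join(rng.choice(alphabet) for _ in range(rng.randint(0, 6)))
        try:
            target(candidate)
        except ValueError:
            pass  # expected rejection of malformed input
        except Exception as exc:  # anything else is behavior nobody designed for
            findings.append((candidate, type(exc).__name__))
    return findings


if __name__ == "__main__":
    for text, error in fuzz(parse_duration)[:5]:
        print(f"{text!r} -> {error}")
```

Inputs with no colon, such as "5", end in an `IndexError`. The fuzzer cannot say whether "5" should mean five seconds, five minutes, or an error message, because the original design never answered that question. The finding goes back to the design phase as a new requirement rather than counting as a failed test.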

Notice that all of these activities have the word “testing” in their name. However, none of these activities really validate that developed software is meeting known, explicit requirements. In fact, the results of any of these activities are immediately turned around into the Design phase to update or create new requirements that will improve the state of the product.

For this reason, I suggest considering them “assessments”. In fact, these activities are often performed by outside parties rather than the actual development team for a product. Development teams should be 100% responsible for validating that their code meets all defined requirements (which is what I have proposed is the sole purpose of testing), but there are numerous ways to discover new problems and requirements for the product, and these frequently involve outside entities and users. So perhaps this is splitting semantic hairs, but I feel the distinction is incredibly important when building up a test suite and testing culture on a development team. The purpose of testing is to confirm expected behaviors. If some activity is doing something other than confirming expected behaviors, it is outside the scope of development and not the primary focus of a development team and their test suite.

That doesn’t mean such activities can’t be incredibly important — they obviously can be! But such practices, even though they often have the word “testing” in their name, are squarely part of the assessment phase of the product cycle, and their results should lead to the creation of new tickets, the specification of new requirements, or the design of new behaviors, not get kicked directly to developers to make immediate and undesigned modifications to the code.

And this is perhaps the most important practical difference between testing and assessment: a failing test (which should be confirming an explicit requirement) results in the code being modified immediately until the test passes. It doesn’t require new design, a new “ticket” in a project tracker, or another turn of the product cycle to address. A defect uncovered through assessment, however, should pass through the design phase and must be solved by the creation of a new explicit requirement. This will also likely be a new “ticket” in a project tracker, and no code is modified or work performed until those steps are complete.

A Final Metaphor

As a final metaphor to explain the difference between what we are calling testing and what we are calling assessment, consider how the words are used in the context of education. A “test” is expected to validate that you learned something you were taught or are required to know as part of a specific course. It’s reasonable to be expected to know what was explicitly part of the content of a course.

How unfair would it feel, however, if during your final for Biology 101, you were asked questions about contract law in 18th century England? That wouldn’t really be testing, would it? Because no one ever told you that such knowledge was part of Biology 101 or what you would need to know to pass it.

On the other hand, an examination that asked you a variety of questions in various subjects to find out where you were strong and where you were lacking knowledge wouldn’t be unfair. This would be considered an “assessment” and would have a different purpose than validating that you learned something you were taught. Its purpose would instead be to find areas where you could improve, or to expose you to previously unknown subject areas that you may wish to learn about.

Similarly in software, a testing suite should be focused on validating that our program “remembers” everything we explicitly taught it, and is properly executing all expected behaviors. Other “assessment” tools are used to find areas where the software should fill in gaps in its knowledge, learn useful new behaviors, or dive deeper into a certain problem space.

Confirming the Purpose of Testing

So, to come full circle to the initial question that inspired this discussion: “Don’t tests do more than confirm expected behaviors?” I hope that this article has established that, for the purpose of a development team, the answer is no. Of course there are many things with the word “testing” in their name that do more than confirm expected behaviors (A/B testing, load testing, fuzz testing, user testing, etc.), but these activities are more correctly part of the assessment phase of the product cycle and not part of the development phase. And thus, for the purposes of this series of posts (which specifically address building up the best possible test suite and testing practices on a mobile development team), those activities are not in scope or particularly relevant.

Without in any way discouraging your team (especially your larger team beyond just the developers) from making full use of all tools for assessing your product or discovering new defects in it, I strongly recommend keeping your developers focused first and foremost on testing practices that confirm expected behaviors. This will be your strongest guarantee of an excellent development phase in every iteration of the product cycle, and will build confidence in what is released to your users!

Next up: Learn about the two kinds of tests that mobile developers should be writing!
